Nuclear fusion, the same kind of energy that fuels stars, could one day power our world with abundant, safe, and carbon-free energy. Aided by supercomputers Summit at the US Department of Energy’s (DOE’s) Oak Ridge National Laboratory (ORNL) and Theta at DOE’s Argonne National Laboratory (ANL), a team of scientists strives toward making fusion energy a reality.

Fusion reactions involve two or more atomic nuclei combining to form different nuclei and particles, converting some of the atomic mass into energy in the process. Scientists are working toward building a nuclear fusion reactor that could efficiently produce heat that would then be used to generate electricity. However, confining plasma reactions that occur at temperatures hotter than the sun is very difficult, and the engineers who design these massive machines can’t afford mistakes.

To ensure the success of future fusion devices such as ITER, which is being built in southern France, scientists can take data from experiments performed on smaller fusion devices and combine them with massive computer simulations to understand the requirements of new machines. ITER will be the world’s largest tokamak, a device that uses magnetic fields to confine plasma particles in the shape of a donut, and is designed to produce 500 megawatts (MW) of fusion power from only 50 MW of input heating power.
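The ratio of those two power figures is commonly called the fusion gain. As a minimal sketch of the arithmetic (the function name `fusion_gain` is chosen here for illustration and does not come from the article):

```python
def fusion_gain(fusion_power_mw: float, heating_power_mw: float) -> float:
    """Ratio of fusion power produced to external heating power supplied."""
    if heating_power_mw <= 0:
        raise ValueError("heating power must be positive")
    return fusion_power_mw / heating_power_mw


# Figures quoted in the article: 500 MW of fusion power from 50 MW of heating.
iter_gain = fusion_gain(500.0, 50.0)
print(f"ITER target gain: {iter_gain:.0f}")
```

With the quoted figures, the target is ten times more fusion power out than heating power in.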

One of the most important components of a fusion reactor is the tokamak’s divertor, a material structure engineered to remove exhaust heat from the reactor’s vacuum vessel. The heat-load width of the divertor is the width of the strip along the reactor’s inner walls that must withstand repeated contact with hot exhaust particles.

A team led by C.S. Chang at Princeton Plasma Physics Laboratory (PPPL) has used the Oak Ridge Leadership Computing Facility’s (OLCF’s) 200-petaflop Summit and Argonne Leadership Computing Facility’s (ALCF’s) 11.7-petaflop Theta supercomputers, together with a supervised machine learning program called Eureqa, to find a new formula for extrapolating from existing tokamak data to the future ITER, based on simulations from their XGC computational code for modeling tokamak plasmas. The team then completed new simulations that confirm their previous ones, which showed that at full power, ITER’s divertor heat-load width would be more than six times wider than the trend in current tokamaks suggested. The results were published in Physics of Plasmas.

“In building any fusion reactor in the future, predicting the heat-load width is going to be critical to ensuring the divertor material maintains its integrity when faced with this exhaust heat,” Chang said. “When the divertor material loses its integrity, the sputtered metallic particles contaminate the plasma and stop the burn or even cause sudden instability. These simulations give us hope that ITER operation might be easier than was initially thought.”

Using Eureqa, the team found hidden parameters that provided a new formula that not only fits the drastic increase predicted for ITER’s heat-load width at full power but also produced the same results as previous experimental and simulation data for existing tokamaks. Among the devices newly included in the study were the Alcator C-Mod, a tokamak at the Massachusetts Institute of Technology (MIT) that holds the record for plasma pressure in a magnetically confined fusion device, and the world’s largest existing tokamak, the JET (Joint European Torus) in the United Kingdom.

“If this formula is validated experimentally, this will be huge for the fusion community and for ensuring that ITER’s divertor can accommodate the heat exhaust from the plasma without too much complication,” Chang said.

ITER deviates from the trend

The Chang team’s work studying ITER’s divertor plates began in 2017 when the group reproduced experimental divertor heat-load width results from three US fusion devices on the OLCF’s former Titan supercomputer: General Atomics’ DIII-D toroidal magnetic fusion device, which has an aspect ratio similar to ITER; MIT’s Alcator C-Mod; and the National Spherical Torus Experiment, a compact low-aspect-ratio spherical tokamak at PPPL. The presence of steady “blobby”-shaped turbulence at the edge of the plasma in these tokamaks did not play a significant role in widening the divertor heat-load width.

The researchers then set out to prove that their XGC code, which simulates particle movements and electromagnetic fields in plasma, could predict the heat-load width on the full-power ITER’s divertor surface. The presence of dynamic edge turbulence—different from the steady blobby-shaped turbulence present in the current tokamak edge—could significantly widen the distribution of the exhaust heat, they realized. If ITER were to follow the current trend of heat-load widths in present-day fusion devices, its heat-load width would be less than a few centimeters—a dangerously narrow width, even for divertor plates made of tungsten, which boasts the highest melting point of all pure metals.

The team’s simulations on Titan in 2017 revealed an unusual jump in the trend—the full-power ITER showed a heat-load width more than six times wider than what the existing tokamaks implied. But the extraordinary finding required more investigation. How could the full-power ITER’s heat-load width deviate so significantly from existing tokamaks?

Scientists operating the C-Mod tokamak at MIT raised the strength of the device’s poloidal magnetic field, which runs top to bottom to confine the donut-shaped plasma inside the reaction chamber, to the value planned for ITER. The other field used in tokamak reactors, the toroidal magnetic field, runs around the circumference of the donut. Combined, these two magnetic fields confine the plasma, as if winding a tight string around a donut. They cause ions to move in loops along the combined magnetic field lines, and researchers believe these looping motions, called gyromotions, might smooth out turbulence in the plasma.

Scientists at MIT then provided Chang with experimental data from the Alcator C-Mod against which his team could compare results from simulations by using XGC. With an allocation of time under the INCITE (Innovative and Novel Computational Impact on Theory and Experiment) program, the team performed extreme-scale simulations on Summit by employing the new Alcator C-Mod data using a finer grid and including a greater number of particles.

“They gave us their data, and our code still agreed with the experiment, showing a much narrower divertor heat-load width than the full-power ITER,” Chang said. “What that meant was that either our code produced a wrong result in the earlier full-power ITER simulation on Titan or there was a hidden parameter that we needed to account for in the prediction formula.”

Machine learning reveals a new formula

Chang suspected that the hidden parameter might be the radius of the gyromotions, called the gyroradius, divided by the size of the machine. Chang then fed the new results to a machine learning program called Eureqa, presently owned by DataRobot, asking it to find the hidden parameter and a new formula for the ITER prediction. The program spit out several new formulas, verifying the gyroradius divided by the machine size as being the hidden parameter. The simplest of these formulas most agreed with the physics insights.
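To make the hidden parameter concrete, here is a minimal sketch of how a gyroradius divided by a machine size could be computed. It uses the textbook gyroradius of a singly charged ion moving at its thermal speed, which is an assumption and not necessarily the exact definition used in the team's formula. The function names and all input values are hypothetical, chosen only for illustration, and are not parameters of any real tokamak.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs (exact SI value)
DEUTERON_MASS = 3.3436e-27           # kilograms (approximate)


def thermal_gyroradius(temperature_ev, field_tesla, mass_kg=DEUTERON_MASS):
    """Gyroradius (m) of a singly charged ion at thermal speed sqrt(T/m)."""
    thermal_speed = math.sqrt(temperature_ev * ELEMENTARY_CHARGE / mass_kg)
    return mass_kg * thermal_speed / (ELEMENTARY_CHARGE * field_tesla)


def normalized_gyroradius(temperature_ev, field_tesla, machine_size_m):
    """Gyroradius divided by a characteristic machine size (dimensionless)."""
    return thermal_gyroradius(temperature_ev, field_tesla) / machine_size_m


# Illustrative inputs only: same plasma temperature and field, two machine sizes.
small_machine = normalized_gyroradius(1000.0, 1.0, machine_size_m=0.5)
large_machine = normalized_gyroradius(1000.0, 1.0, machine_size_m=2.0)
print(f"small machine: {small_machine:.2e}, large machine: {large_machine:.2e}")
```

In this sketch the ratio shrinks as the machine grows or the field strengthens, which shows how a much larger device can sit far outside the range of the parameter covered by smaller ones.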

Chang presented the findings at various international conferences last year. He was then given three more simulation cases from ITER headquarters to test the new formula. The simplest formula successfully passed the test. PPPL research staff physicists Seung-Hoe Ku and Robert Hager employed the Summit and the Theta supercomputers for these three critically important ITER test simulations. Summit is located at the OLCF, a DOE Office of Science User Facility at ORNL. Theta is located at ALCF, another DOE Office of Science User Facility, located at ANL.

In an exciting finding, the new formula predicted the same results as the present experimental data—a huge jump in the full-power ITER’s heat-load width, with the medium-power ITER landing in between.

“Verifying whether ITER operation is going to be difficult due to an excessively narrow divertor heat-load width was something the entire fusion community has been concerned about, and we now have hope that ITER might be much easier to operate,” Chang said. “If this formula is correct, design engineers would be able to use it in their design for more economical fusion reactors.”

A big data problem

Each of the team’s ITER simulations consisted of 2 trillion particles and more than 1,000 time steps, requiring most of the Summit machine and one full day or longer to complete. The data generated by one simulation, Chang said, could total a whopping 200 petabytes, eating up nearly all of Summit’s file system storage.

“Summit’s file system only holds 250 petabytes’ worth of data for all the users,” Chang said. “There is no way to get all this data out to the file system, and we usually have to write out some parts of the physics data every 10 or more time steps.”
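The scale of the problem follows from the figures quoted above. The sketch below is back-of-the-envelope arithmetic only. It assumes decimal petabytes, exactly 1,000 time steps (the article says "more than 1,000"), and output spread evenly across steps. The helper `reduced_output_bytes` and its `fraction_saved` parameter are hypothetical names introduced for illustration.

```python
PETABYTE = 10**15  # bytes, decimal convention (an assumption)

particles = 2 * 10**12               # 2 trillion particles per simulation
time_steps = 1000                    # lower bound quoted in the article
full_output_bytes = 200 * PETABYTE   # possible total output of one simulation
file_system_bytes = 250 * PETABYTE   # Summit's shared file system capacity

# Average data volume per particle per time step if everything were saved.
bytes_per_particle_step = full_output_bytes / (particles * time_steps)

# Share of the whole file system that one complete output would occupy.
share_of_file_system = full_output_bytes / file_system_bytes


def reduced_output_bytes(write_every, fraction_saved=1.0):
    """Output size when only every Nth step is written, and optionally
    only a fraction of the physics data at each written step."""
    steps_written = time_steps // write_every
    return full_output_bytes // time_steps * steps_written * fraction_saved


every_tenth = reduced_output_bytes(10)
print(f"{bytes_per_particle_step:.0f} bytes per particle per step")
print(f"{share_of_file_system:.0%} of the file system for one full output")
print(f"{every_tenth / PETABYTE:.0f} PB if all data is written every 10th step")
```

Under these assumptions a full output averages about 100 bytes per particle per step and would fill 80 percent of the file system. Even writing everything at only every tenth step would still take 20 petabytes, which is why only some parts of the physics data are saved.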

This has proven challenging for the team, who often found new science in the data that was not saved in the first simulation.

“I would often tell Dr. Ku, ‘I wish to see this data because it looks like we could find something interesting there,’ only to discover that he could not save it,” Chang said. “We need reliable, large-compression-ratio data reduction technologies, so that’s something we are working on and are hopeful to be able to take advantage of in the future.”

Chang added that staff members at both the OLCF and ALCF were critical to the team’s ability to run codes on the centers’ massive high-performance computing systems.

“Help rendered by the OLCF and ALCF computer center staff—especially from the liaisons—has been essential in enabling these extreme-scale simulations,” Chang said.

The team is anxiously awaiting the arrival of two of DOE’s upcoming exascale supercomputers, the OLCF’s Frontier and ALCF’s Aurora, machines that will be capable of a billion billion calculations per second, or 10^18 calculations per second. The team will next include more complex physics, such as electromagnetic turbulence in a more refined grid with a greater number of particles, to verify the new formula’s fidelity further and improve its accuracy. The team also plans to collaborate with experimentalists to design experiments to further validate the electromagnetic turbulence results that will be obtained on Summit or Frontier.

“Constructing a New Predictive Scaling Formula for ITER’s Divertor Heat-Load Width Informed by a Simulation-Anchored Machine Learning” is published in Physics of Plasmas.

Provided by: Oak Ridge National Laboratory

More information: C. S. Chang et al. Constructing a new predictive scaling formula for ITER’s divertor heat-load width informed by a simulation-anchored machine learning. Physics of Plasmas (2021). DOI: 10.1063/5.0027637

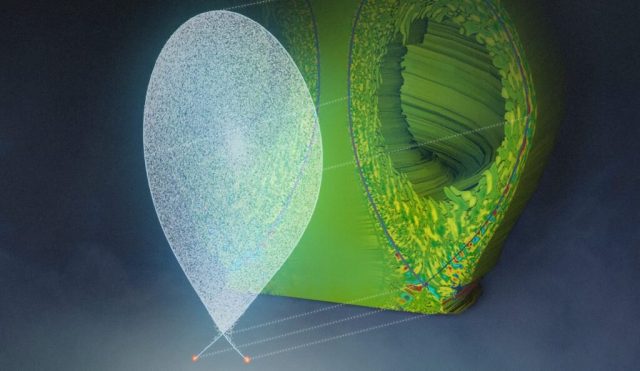

Image: At left, ions being lost from the confined plasma and following the magnetic field lines to the material divertor plates in the gyrokinetic simulation code XGC1. At right, an XGC1 simulation of edge turbulence in DIII-D plasma, showing the plasma turbulence changing the eddy structure to isolated blobs (represented by red color) in the vicinity of the magnetic separatrix (black line).

Credit: Kwan-Liu Ma’s research group, University of California Davis; David Pugmire and Adam Malin, ORNL